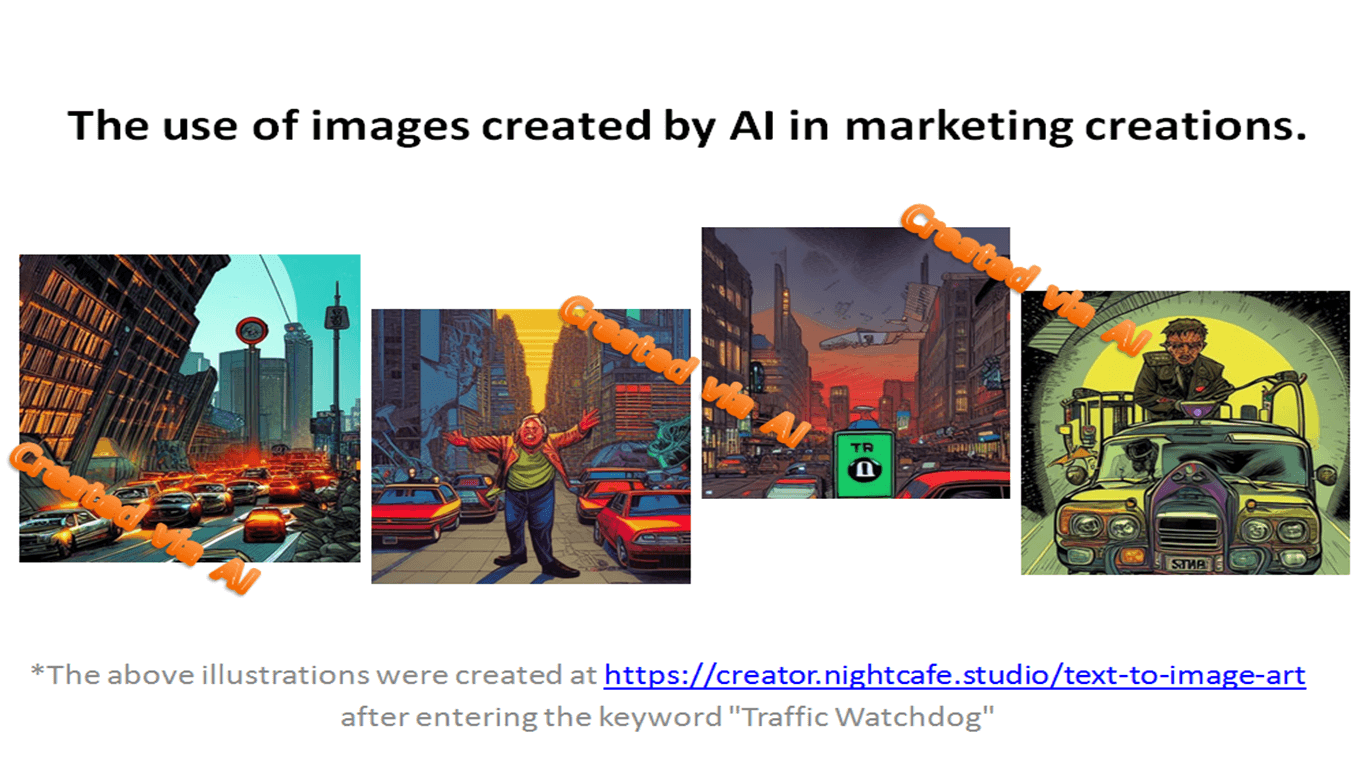

The use of AI-generated images in marketing creatives

Source: own elaboration

Recently, text-to-image AI models have become a very popular subject. More and more programs and applications on the market use them, and computer-generated graphics are being put to many uses. But like everything related to AI, this new capability of machines is causing a lot of controversy. Is it possible that computers will not only calculate for us, but also create works of art on our behalf?

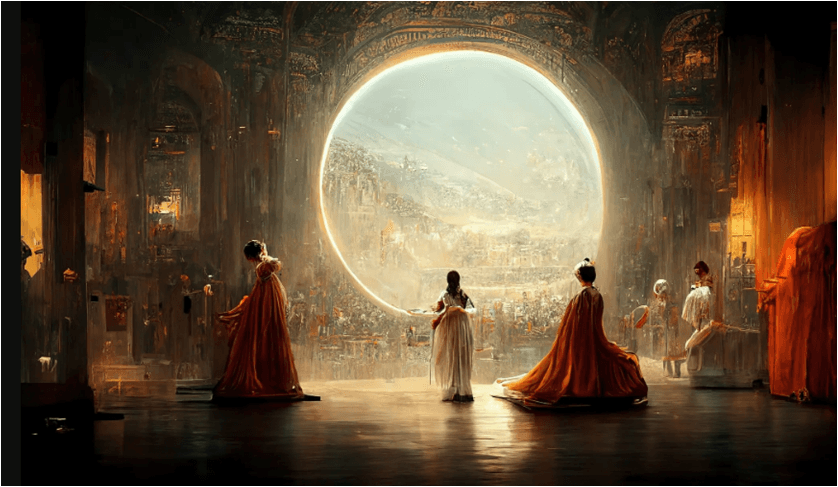

Jason Allen, a professional game designer, spent approximately 80 hours creating „Theatre D’opera Spatial” (Image 1), which won the Colorado State Fair’s art competition in the „digital arts/digitally manipulated photography” category. That alone would not be surprising, were it not for the fact that after winning, the author revealed that the image had been created with Midjourney, a program that uses text-to-image artificial intelligence. This means that the picture was generated by an algorithm which designed the work based on the data it had been trained on and the keywords selected by Mr. Allen. Nor was „Theatre D’opera Spatial” the only result: his queries returned over 100 images, from which he chose three, including the one already mentioned. Of course, the goal wasn’t just to take part in the competition (the prize was reportedly a staggering $300), but to publicize the „Space Opera Theater” project, of which these graphics will be a part.

Although the organizers of the competition had no objections, and the rules allowed any „artistic practice that uses digital technology as part of the creative or presentation process”, a heated discussion broke out online. After all, who is the author of the picture? The programmers who created the algorithm behind Midjourney, the artists whose works make up the data the program was trained on, or perhaps Mr. Allen, who came up with the idea and devoted his time to choosing the right parameters, including specific keywords? The topic is too new to have been regulated by law, but just in case, the work was signed „Jason M. Allen via Midjourney”.

Image 1. Jason M. Allen via Midjourney - Theatre D’opera Spatial

Similar models are already being used in marketing: in June 2022, Cosmopolitan magazine presented what it called the first magazine cover created by artificial intelligence (Image 2), generated with the DALL-E 2 program. DALL-E 2 was developed by OpenAI, and its name combines the name of the surrealist painter Salvador Dali with that of Pixar’s friendly robot WALL-E. Besides the image itself, the cover carried the slogans „Meet the World’s First Artificial Intelligence Magazine Cover” and „And it only took 20 seconds to make”.

Image 2. Cover of Cosmopolitan magazine from June 2022

So are such models really useful in marketing communication? James O’Claire put this to the test by trying to create specific marketing creatives with Stable Diffusion (a deep learning model released in 2022, used mainly to generate detailed images from text descriptions), and he discussed the results of his experiment in the post „Can You Use AI to Generate Marketing Creatives?” published on his website, jamesoclaire.com/. In his opinion, using machine learning models that generate images from text prompts in marketing has its pros and cons.

The great advantage of this solution is time: AI can return many images on a given topic in just a few seconds, with almost no human involvement, so labor costs are very low. Professional programs let us define certain parameters of the generated graphics, so we can have a complete creative in a very short time. As a result, AI models can produce practically instantly what would take a graphic designer (or a whole team of graphic designers) weeks.

However, we must take into account that the machine works differently from us, and its output doesn’t have to match our vision. Although text-to-image AI models run on algorithms created by humans, they lack traits typical of people, such as cultural or social influences, experiencing the world through taste, smell or touch, feeling emotions, and so on. As a result, the images they return can seem completely abstract to us, and therefore extremely interesting. This can be invaluable during the brainstorming that usually accompanies the creation of a campaign, provided that the marketing team is open to new ideas. On the other hand, the generated images may differ significantly from what we wanted to achieve, and steering the AI model in the right direction may not be easy; in fact, we don’t know whether it will be possible at all. As James O’Claire himself writes, „To me, it felt like the less precise control I tried to exert, the better the results”.

Let’s also take into account that visualizations created with text-to-image algorithms are random: each new query does not refine the previous result but generates a completely new one, so we can’t count on repeatability. In addition, programs of this type work from the data they were trained on, so the colors and details of the graphics won’t perfectly match those used by a given brand (unless the training data is very carefully selected). Finally, the results aren’t flexible: once generated, they are of course a complete graphic, but adapting them to other dimensions, resolutions or orientations can be very difficult, while materials used in marketing creatives should be easy to modify.

Summing up, artificial intelligence models may not be the best solution if we want to achieve a specific effect, but they will be an irreplaceable source of inspiration. As James O’Claire wrote, „I see Stable Diffusion as a boon, the clip-art of our generation bringing art closer to everyone and the tools for marketers to create better experience ads”.

Although every image created by artificial intelligence is unique, not all AI models deliver results of the same quality. Different programs use different algorithms, so it isn’t surprising that one model works in a certain way while another goes in a completely different direction. The data a model was trained on also has a particular impact on its style, because it defines what the machine has to work with. According to Tomasz Rożek, the founder of the „Nauka. To Lubię” („Science. I Like It”) foundation, „You could say that there is a race between the creators and trainers of such algorithms. The race over which algorithm will be better, more creative, or which one will create more original graphics or animations”.

Marketing, as one of the most dynamic industries, will certainly draw on this new source of machine-made graphics. In our opinion, it is therefore safe to assume that in the not-too-distant future there will be programs using text-to-image AI models designed specifically for marketers, and who knows, perhaps marketing teams will even be expanded with specialists who work professionally with AI models.